- Web Scraping Tutorial

- Web Scraping Reddit Python

- Reddit Web Scraping Jobs

- Web Scraping Reddit Free

- Web Scraping Reddit

In this part of our Web Scraping – Beginners Guide tutorial series, we’ll show you how to scrape Reddit comments, navigate profile pages, and parse and extract data from them. Let’s continue from where we left off in the previous post, Web scraping Guide: Part 2 – Build a web scraper for Reddit using Python and BeautifulSoup.

Reddit scraping means using computer programs known as web scrapers to extract publicly available data from the Reddit website. These tools were created in response to the limitations you are bound to face when using the official Reddit API. When using a Reddit scraper, be aware that Reddit frowns on scraping. Worth Web Scraping Services has experience in scraping Reddit, and we often scrape Reddit data.

Reddit is a network of communities based on people’s interests. It is an online platform where people meet to talk to one another, discuss matters, and share ideas, links, text posts, images, and more.

Price scraping involves gathering price information for a product from an eCommerce website using web scraping. A price scraper can help you collect prices from websites so that you can monitor both your competitors’ prices and your own.

- I made a Python web scraping guide for beginners. I've been web scraping professionally for a few years and decided to make a series of web scraping tutorials that I wish I had when I started. The series will follow a large project I'm building that analyzes political rhetoric in the news.

- Before we begin, I want to point out that we’ll be scraping the old Reddit, not the new one. That’s because the new site loads more posts automatically when you scroll down, and it’s not possible to simulate this scroll-down action using a simple tool like Requests.
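As a small illustration of switching to the old site, the sketch below rewrites a Reddit URL so that it points at old.reddit.com. The function name `toOldReddit` and the list of hostnames it recognizes are assumptions made for this example.

```javascript
// Sketch: rewrite a Reddit URL to its old.reddit.com equivalent.
// `toOldReddit` is a hypothetical helper name used only for illustration.
function toOldReddit(url) {
  const parsed = new URL(url);
  // Only touch reddit.com, www.reddit.com and new.reddit.com; leave other hosts alone.
  if (/^(?:www\.|new\.)?reddit\.com$/i.test(parsed.hostname)) {
    parsed.hostname = 'old.reddit.com';
  }
  return parsed.toString();
}

console.log(toOldReddit('https://www.reddit.com/r/photographs/'));
// https://old.reddit.com/r/photographs/
```

The path and query string are left untouched, so listing pages and individual posts keep working after the rewrite.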

How to Scrape Prices

1. Create your own Price Monitoring Tool to Scrape Prices

There are plenty of web scraping tutorials on the internet where you can learn how to create your own price scraper to gather pricing from eCommerce websites. However, writing a new scraper for every different eCommerce site could get very expensive and tedious. Below we demonstrate some advanced techniques to build a basic web scraper that could scrape prices from any eCommerce page.

2. Web Scraping using Price Scraping Tools

Web scraping tools such as ScrapeHero Cloud can help you scrape prices without writing code or downloading and learning a new tool. ScrapeHero Cloud has pre-built crawlers for popular eCommerce websites such as Amazon, Walmart, and Target. It also has scraping APIs that return prices from Amazon and Walmart in real time, so you can get pricing details within seconds.

3. Custom Price Monitoring Solution

ScrapeHero Price Monitoring Solutions are cost-effective and can be built within weeks, and in some cases days. Our price monitoring solution can easily be scaled to include multiple websites and/or products within a short span of time. We have considerable experience in handling the challenges involved in price monitoring and the know-how that product monitoring requires.

How to Build a Price Scraper

In this tutorial, we will show you how to build a basic web scraper which will help you in scraping prices from eCommerce websites by taking a few common websites as an example.

Let’s start by taking a look at a few product pages, and identify certain design patterns on how product prices are displayed on the websites.

Amazon.com

Sephora.com

Observations and Patterns

Some patterns that we identified by looking at these product pages are:

- Price appears as currency figures (never as words)

- The price is the currency figure with the largest font size

- The price appears within the first 600 pixels of the page height

- The price usually appears above other currency figures

Of course, there could be exceptions to these observations; we’ll discuss how to deal with them later in this article. We can combine these observations to create a fairly effective and generic crawler for scraping prices from eCommerce websites.

Implementation of a generic eCommerce scraper to scrape prices

Step 1: Installation

This tutorial uses the Google Chrome web browser. If you don’t have Google Chrome installed, you can follow the installation instructions.

Instead of running Google Chrome by hand, advanced developers can use Puppeteer, a Node.js library that controls Chrome programmatically. This removes the need for a running GUI application to run the scraper. However, that is beyond the scope of this tutorial.

Step 2: Chrome Developer Tools

The code presented in this tutorial is designed to keep price scraping as simple as possible. Therefore, it will not be capable of fetching the price from every product page out there.

For now, we’ll visit an Amazon product page or a Sephora product page in Google Chrome.

- Visit the product page in Google Chrome

- Right-click anywhere on the page and select ‘Inspect Element’ to open up Chrome DevTools

- Click on the Console tab of DevTools

Inside the Console tab, you can enter any JavaScript code. The browser will execute the code in the context of the web page that has been loaded. You can learn more about DevTools using their official documentation.

Step 3: Run the JavaScript snippet

Copy the following JavaScript snippet and paste it into the console.
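A minimal sketch of such a snippet, reconstructed from the observations listed earlier and the steps described under “How it works”. It is not the original code: the names `toRecord`, `canBePrice` and `findPrice`, the currency regular expression and the way the 600-pixel limit is applied are all assumptions.

```javascript
// Sketch only: names, regex and thresholds are assumptions for illustration.
const CURRENCY = /^\s*(?:[$£€¥₹]|USD|EUR|GBP)\s?\d{1,3}(?:,?\d{3})*(?:\.\d{1,2})?\s*$/;
const MAX_Y = 600; // observation: the price sits within the first 600 pixels

// Convert a DOM element into a plain record: text, font size and XY position.
function toRecord(element, view) {
  const rect = element.getBoundingClientRect();
  const style = view.getComputedStyle(element);
  return {
    text: (element.textContent || '').trim(),
    fontSize: parseFloat(style.fontSize) || 0,
    x: rect.left,
    y: rect.top + (view.scrollY || 0), // position relative to the top of the page
  };
}

// A record is a candidate if it is a currency figure near the top of the page.
function canBePrice(record) {
  return CURRENCY.test(record.text) && record.y >= 0 && record.y <= MAX_Y;
}

function findPrice(doc, view) {
  const candidates = Array.from(doc.querySelectorAll('body *'))
    .map((element) => toRecord(element, view))
    .filter(canBePrice);
  // Largest font size first; ties go to the record higher up on the page.
  candidates.sort((a, b) => b.fontSize - a.fontSize || a.y - b.y);
  return candidates.length > 0 ? candidates[0].text : null;
}

// In the DevTools console `document` and `window` exist, so the result is printed.
if (typeof document !== 'undefined') {
  console.log(findPrice(document, window));
}
```

`findPrice` takes the document and window as arguments rather than reading globals, so the same function can also be exercised outside a browser with stand-in objects.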

Press ‘Enter’ and you should now see the price of the product displayed on the console.

If you don’t, then you have probably visited a product page that is an exception to our observations. This is completely normal; we’ll discuss how to expand the script to cover more product pages of this kind. In the meantime, you could try one of the sample pages mentioned in step 2.

The animated GIF below shows how we get the price from Amazon.com

How it works

First, we have to fetch all the HTML DOM elements in the page.

We need to convert each of these elements to a simple JavaScript object that stores its XY position, text content and font size, which looks something like {'text':'Tennis Ball', 'fontSize':'14px', 'x':100,'y':200}. So we have to write a function for that, as follows.

Now, convert all the elements collected to JavaScript objects by applying our function on all elements using the JavaScript map function.

Remember the observations we made regarding how a price is displayed. We can now filter just those records which match our design observations. So we need a function that says whether a given record matches with our design observations.

We have used a regular expression to check whether a given text is a currency figure or not. You can modify this regular expression if it doesn’t cover the web pages that you’re experimenting with.

Now we can filter just the records that are possibly price records.
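To make the check and the filter concrete, here is a rough sketch that runs them on a few hand-written records. The regular expression, the `canBePrice` name, the numeric `fontSize` values and the sample records are assumptions for illustration, not the original code.

```javascript
// Assumed pattern: a currency symbol or code followed by a number, and nothing else.
const CURRENCY = /^\s*(?:[$£€¥₹]|USD|EUR|GBP)\s?\d{1,3}(?:,?\d{3})*(?:\.\d{1,2})?\s*$/;

// A record matches our observations if its text is a currency figure
// and it sits within the first 600 pixels of the page.
function canBePrice(record) {
  return CURRENCY.test(record.text) && record.y >= 0 && record.y <= 600;
}

// Made-up records in the shape described above (fontSize already parsed to a number).
const sampleRecords = [
  { text: 'Tennis Ball', fontSize: 14, x: 100, y: 200 },
  { text: '$12.49', fontSize: 24, x: 100, y: 240 },
  { text: '$15.99', fontSize: 12, x: 180, y: 244 },
  { text: '$4.99 shipping', fontSize: 12, x: 100, y: 280 }, // extra words: rejected
  { text: '$89.00', fontSize: 18, x: 100, y: 1400 }, // too far down: rejected
];

const candidates = sampleRecords.filter(canBePrice);
console.log(candidates.map((record) => record.text)); // [ '$12.49', '$15.99' ]
```

Because the pattern is anchored at both ends, text such as “$4.99 shipping” is rejected. Loosening the anchors is one way to adapt the expression to pages that wrap the price in extra words.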

Finally, as we’ve observed, the price is the currency figure with the largest font size. If several currency figures share the largest font size, the price is probably the one positioned highest on the page. We are going to sort our records based on these conditions, using the JavaScript sort function.

Now we just need to display it on the console
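The sorting and display steps can be sketched as follows, assuming candidate records with a numeric `fontSize` and a `y` position. The comparator name `byPriceLikelihood` and the sample records are made up for this example.

```javascript
// Sample candidate records (made up for illustration).
const priceCandidates = [
  { text: '$15.99', fontSize: 12, x: 180, y: 244 },
  { text: '$12.49', fontSize: 24, x: 100, y: 300 },
  { text: '$13.99', fontSize: 24, x: 100, y: 240 },
];

// Larger font size first; on a tie, the record closer to the top of the page wins.
function byPriceLikelihood(a, b) {
  return b.fontSize - a.fontSize || a.y - b.y;
}

// Sort a copy so the original list of candidates is left untouched.
const ranked = [...priceCandidates].sort(byPriceLikelihood);
const bestGuess = ranked.length > 0 ? ranked[0].text : null;
console.log(bestGuess); // $13.99
```

Two records share the largest font size here, so the tie-break on `y` decides between them.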

Taking it further

Moving to a GUI-less based scalable program

You can replace the manually operated Google Chrome with Puppeteer, a Node.js library that drives Chrome without a visible window (headless mode). Puppeteer is a fast option for headless web rendering because it uses the same browser engine as Google Chrome. Once Puppeteer is set up, you can inject our script programmatically into the headless browser and have the price returned to a function in your program. To learn more, visit our tutorial on Puppeteer.

Learn more: Web Scraping with Puppeteer and Node.Js
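A rough sketch of that idea, assuming Puppeteer has been installed with npm. The function name `scrapePrice` and its parameters are hypothetical. The Puppeteer module is passed in as an argument so that the sketch makes no assumption about how it is loaded.

```javascript
// Sketch: run a price-finding function inside a headless browser page.
// `findPriceInPage` must be self-contained, because Puppeteer serializes it
// and executes it in the context of the loaded page.
async function scrapePrice(puppeteer, url, findPriceInPage) {
  const browser = await puppeteer.launch(); // headless by default
  try {
    const page = await browser.newPage();
    // Wait until network activity settles so late-loading prices are rendered.
    await page.goto(url, { waitUntil: 'networkidle2' });
    return await page.evaluate(findPriceInPage);
  } finally {
    await browser.close(); // always release the browser, even on errors
  }
}

// Usage, assuming `npm install puppeteer` has been run and `productUrl`
// holds the address of a product page:
//
//   const puppeteer = require('puppeteer');
//   scrapePrice(puppeteer, productUrl, () => document.title).then(console.log);
```

The function passed as `findPriceInPage` would contain the same logic that was pasted into the console, written so that it returns the price instead of printing it.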

Improving and enhancing this script

You will quickly notice that some product pages will not work with such a script because they don’t follow the assumptions we have made about how the product price is displayed and the patterns we identified.

Unfortunately, there is no “holy grail” or perfect solution to this problem. You can, however, study more web pages, identify more patterns and enhance this scraper accordingly.

A few suggestions for enhancements are:

- Figuring out more features, such as font-weight, font color, etc.

- Class names or IDs of the elements containing the price probably include the word “price”. You could look for other commonly occurring words as well.

- Currency figures with strike-through are probably regular (pre-discount) prices, so they can be ignored.

There could be pages that follow some of our design observations but violate others. The snippet provided above strictly filters out elements that violate even one of the observations. To deal with this, you can try creating a score-based system. This would award points for following certain observations and deduct points for violating others. Elements scoring above a particular threshold could be considered the price.
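One possible shape for such a scoring system is sketched below. The weights, the threshold, the regular expression and the extra record fields `className` and `struckThrough` are all assumptions chosen for illustration. In practice they would need tuning against real pages.

```javascript
// Hypothetical pattern, weights and threshold; tune them on pages you care about.
const CURRENCY_RE = /^\s*(?:[$£€¥₹]|USD|EUR|GBP)\s?\d{1,3}(?:,?\d{3})*(?:\.\d{1,2})?\s*$/;
const THRESHOLD = 5;

// Award points for each observation a record follows, deduct for violations.
function scoreRecord(record, largestCurrencyFont) {
  let score = 0;
  score += CURRENCY_RE.test(record.text) ? 3 : -3; // looks like a currency figure
  score += record.y <= 600 ? 1 : -1; // near the top of the page
  if (record.fontSize >= largestCurrencyFont) score += 2; // biggest currency figure
  if (/price/i.test(record.className || '')) score += 2; // class name mentions "price"
  if (record.struckThrough) score -= 2; // probably the old price
  return score;
}

function likelyPrices(records) {
  const fonts = records
    .filter((record) => CURRENCY_RE.test(record.text))
    .map((record) => record.fontSize);
  const largest = fonts.length > 0 ? Math.max(...fonts) : Infinity;
  return records.filter((record) => scoreRecord(record, largest) >= THRESHOLD);
}

// Made-up page where the real price sits below the 600-pixel mark.
const pageRecords = [
  { text: 'Tennis Ball', fontSize: 28, y: 100, className: 'product-title', struckThrough: false },
  { text: '$24.99', fontSize: 14, y: 620, className: 'list-price', struckThrough: true },
  { text: '$19.99', fontSize: 22, y: 650, className: 'sale-price', struckThrough: false },
  { text: '$5.00', fontSize: 12, y: 900, className: 'coupon', struckThrough: false },
];

console.log(likelyPrices(pageRecords).map((record) => record.text)); // [ '$19.99' ]
```

In this sample the sale price violates the 600-pixel observation, so a strict filter would drop it. It still scores 6 and passes the threshold of 5.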

The next significant step for handling other pages is to employ Artificial Intelligence/Machine Learning based techniques. These let you identify and classify patterns and automate the process to a larger degree. This is still an evolving field of study, and we at ScrapeHero are already using such techniques with varying degrees of success.

If you need help scraping prices from Amazon.com, you can check out our tutorial specifically designed for Amazon.com:

Learn More: How to Scrape Prices from Amazon using Python

Disclaimer: Any code provided in our tutorials is for illustration and learning purposes only. We are not responsible for how it is used and assume no liability for any detrimental usage of the source code. The mere presence of this code on our site does not imply that we encourage scraping or scrape the websites referenced in the code and accompanying tutorial. The tutorials only help illustrate the technique of programming web scrapers for popular internet websites. We are not obligated to provide any support for the code; however, if you add your questions in the comments section, we may periodically address them.

Thousands of new images are uploaded to Reddit every day.

Downloading every single image from your favorite subreddit could take hours of copy-pasting links and downloading files one by one.

A web scraper can easily help you scrape and download all images on a subreddit of your choice.

Web Scraping Images

To achieve our goal, we will use ParseHub, a free and powerful web scraper that can work with any website.

We will also use the free Tab Save Chrome browser extension. Make sure to get both tools set up before starting.

If you’re looking to scrape images from a different website, check out our guide on downloading images from any website.

Scraping Images from Reddit

Now, let’s get scraping.

- Open ParseHub and click on “New Project”. Enter the URL of the subreddit you will be scraping. The page will now be rendered inside the app. Make sure to use the old.reddit.com URL of the page for easier scraping.

NOTE: If you’re looking to scrape a private subreddit, check our guide on how to get past a login screen when web scraping. In this case, we will scrape images from the r/photographs subreddit.

- You can now make the first selection of your scraping job. Start by clicking on the title of the first post on the page. It will be highlighted in green to indicate that it has been selected. The rest of the posts will be highlighted in yellow.

- Click on the second post on the list to select them all. They will all now be highlighted in green. On the left sidebar, rename your selection to posts.

- ParseHub is now scraping information about each post on the page, including the thread link and title. In this case, we do not want this information. We only want direct links to the images. As a result, we will delete these extractions from our project. Do this by deleting both extract commands under your posts selection.

- Now, we will instruct ParseHub to click on each post and grab the URL of the image from each post. Start by clicking on the PLUS(+) sign next to your posts selection and choose the click command.

- A pop-up will appear asking you if this is a “next page” button. Click on “no” and rename your new template to posts_template.

- Reddit will now open the first post on the list and let you select data to extract. In our case, our first post is a stickied post without an image. So we will open a new browser tab with a post that actually has an image in it.

- Now we will click on the image on the page in order to scrape its URL. This will create a new selection; rename it to image. Expand it using the icon next to its name and delete the “image” extraction, leaving only the “image_url” extraction.

Adding Pagination

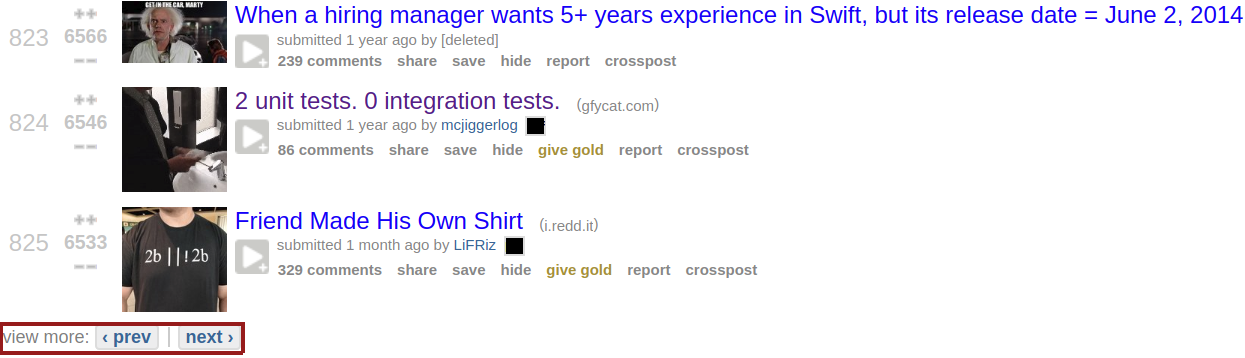

ParseHub is now extracting the image URLs from each post on the first page of the subreddit. We will now make ParseHub scrape additional pages of posts.

- Using the tabs at the top and the side of ParseHub, return to the subreddit page and your main_template.

- Click on the PLUS(+) sign next to your page selection and choose the “select” command.

- Scroll all the way down to the bottom of the page and click on the “next” link. Rename your selection to “next”.

- Expand your next selection and remove both extractions under it.

- Use the PLUS(+) sign next to your next selection and add a “click” command.

- A pop-up will appear asking you if this is a “next page” link. Click on “Yes” and enter the number of times you’d like to repeat this process. In this case, we will scrape 4 more pages.

Running your Scrape

It is now time to run your scrape and download the list of image URLs from each post.

Start by clicking on the green Get Data button on the left sidebar.

Here you will be able to test, run, or schedule your web scraping project. In this case, we will run it right away.

Once your scrape is done, you will be able to download it as a CSV or JSON file.

Downloading Images from Reddit

Now it’s time to use your extracted list of URLs to download all the images you’ve selected.

For this, we will use the Tab Save Chrome browser extension. Once you’ve added it to your browser, open it and use the edit button to enter the URLs you want to download (copy-paste them from your ParseHub export).
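If you exported JSON rather than CSV, a short script can turn the export into a plain list of URLs ready to paste. The sketch below assumes the export has a top-level `posts` array whose entries carry an `image_url` field, matching the selection names used in this guide. The exact shape of a real ParseHub export may differ, and `extractImageUrls` is a hypothetical helper name.

```javascript
// Sketch: pull image URLs out of a JSON export.
// Assumed export shape: { "posts": [ { "image_url": "https://..." }, ... ] }
function extractImageUrls(exportJson) {
  const data = JSON.parse(exportJson);
  const urls = (data.posts || [])
    .map((post) => post.image_url)
    // Posts without an image (for example stickied text posts) have no URL.
    .filter((url) => typeof url === 'string' && url.length > 0);
  return [...new Set(urls)]; // drop duplicates while keeping the original order
}

// Made-up export used only to demonstrate the function.
const sampleExport = JSON.stringify({
  posts: [
    { image_url: 'https://i.redd.it/abc.jpg' },
    {},
    { image_url: 'https://i.redd.it/def.png' },
    { image_url: 'https://i.redd.it/abc.jpg' },
  ],
});

console.log(extractImageUrls(sampleExport).join('\n'));
```

The output is one URL per line, which suits a copy-paste workflow.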

Once you click on the download button, all images will be downloaded to your device. This might take a few minutes depending on how many images you’re downloading.

Closing Thoughts

You now know how to download images from Reddit directly to your device.

If you want to scrape more data, check out our guide on how to scrape more data from Reddit, including users, upvotes, links, comments and more.